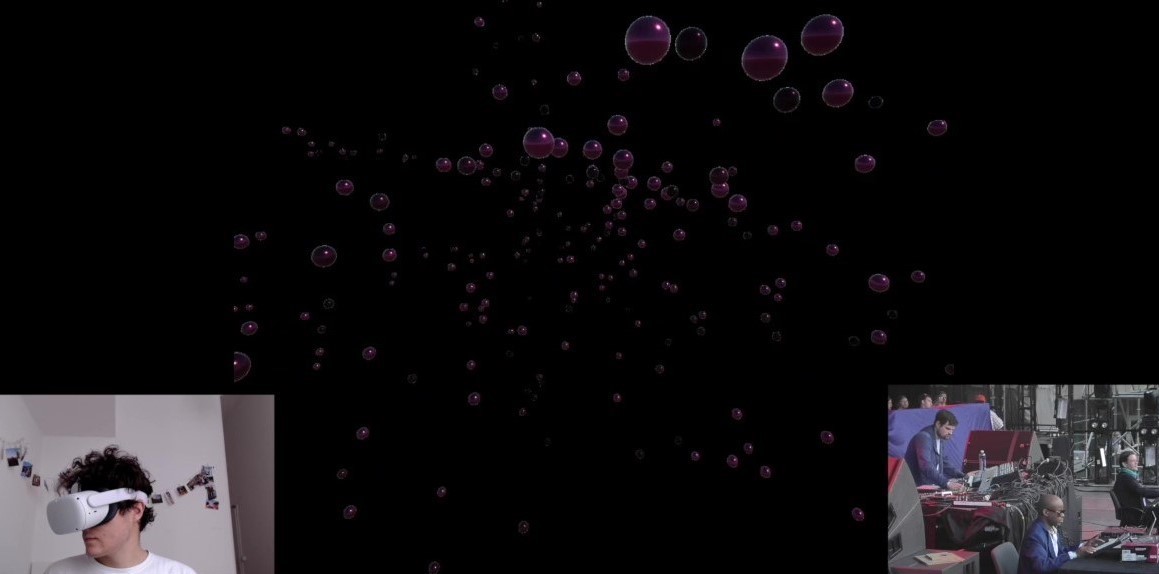

Audio Visualization application in VR developed for Oculus Quest 2 headsets

Project Overview

A VR application developed in Unity3D that immerses the user in a reactive 3D particle flowfield. Prototyped for use in a performance context, Noise Flowfield is a 3D audio visualization application that reacts to sounds in the environment.

My contributions

Because I have work experience in Unity3D development with the XR Interaction Toolkit, I took over responsibility for development. Although I had never developed for a VR device before, the development process was quite smooth, since the Oculus Quest 2 runs an Android-based operating system.

Other team members: Elisabeth Oswald (TU Berlin), Anna Petrouffa (TU Berlin), Rhea Widmer (TU Berlin), Lorenzo Cocchia (Politecnico di Milano)

Concept

This prototype was developed in the early stages of the six-month research project "Future of Performing Arts with XR Technologies" by TU Berlin and Empiria Theatre Zagreb. The research aimed to develop an interaction concept that uses XR to create experiences for artistic performance. Because Oculus VR headsets are unsuitable for a performance context, this prototype was not developed further.

Behind the scenes: Technicalities

The particles in the scene react to sounds in the environment. The application reads the microphone input and uses the amplitude of the signal to compute the speed and rotation of the particles by linear interpolation. Additionally, the spectrum is divided into 8 bands that determine the color and size of the particles.
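
The mapping described above can be illustrated with a short sketch. It is written in Python for readability and is not the project's Unity code. Several details are assumptions made for illustration only: amplitude is measured as the RMS of one audio frame, the 8 bands are of equal width, and the function names and the speed and rotation ranges are hypothetical.

```python
import math


def lerp(a, b, t):
    """Linear interpolation between a and b, with t clamped to [0, 1]."""
    t = max(0.0, min(1.0, t))
    return a + (b - a) * t


def rms_amplitude(samples):
    """Root-mean-square amplitude of one frame of samples in [-1, 1]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def magnitude_spectrum(samples):
    """Naive DFT magnitudes of the lower half of the bins (fine for short frames)."""
    n = len(samples)
    mags = []
    for k in range(n // 2):
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = -sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        mags.append(math.hypot(re, im) / n)
    return mags


def split_into_bands(spectrum, band_count=8):
    """Average the spectrum over band_count equal-width bands (assumed layout)."""
    size = len(spectrum) // band_count
    return [sum(spectrum[b * size:(b + 1) * size]) / size for b in range(band_count)]


def particle_params(samples, min_speed=0.1, max_speed=2.0, min_rot=0.0, max_rot=90.0):
    """Map one audio frame to particle parameters (ranges are hypothetical)."""
    amplitude = rms_amplitude(samples)
    return {
        "speed": lerp(min_speed, max_speed, amplitude),
        "rotation": lerp(min_rot, max_rot, amplitude),
        # The band values would then drive particle color and size.
        "bands": split_into_bands(magnitude_spectrum(samples)),
    }


if __name__ == "__main__":
    # A 64-sample test tone whose energy falls into the second band.
    frame = [math.sin(2 * math.pi * 5 * i / 64) for i in range(64)]
    print(particle_params(frame))
```

Louder input pushes speed and rotation toward their upper bounds, while the band values shift with the pitch content of the sound. In a real-time setting the values would typically be smoothed across frames to avoid jitter.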

Outlook

Although this prototype was left at an early stage for performance-specific reasons, I believe that it still has strong potential for the performing arts. One idea would be to use motion capture to create real-time avatars of dancers. Inspired by Merce Cunningham and his use of the software Life Forms, which portrayed outlines of the human figure in three-dimensional space, motion capture brings another dimension to the discussion of what digital technologies can add to performances.